Opinion: It’s Twitter’s job to deal with its abuse problem, not its users’.

Andrew Rowley and Shauna Madden, PR Account Managers, share their view on Twitter’s new experimental updates.

In recent years Twitter has consistently come under fire from its own users, who claim it does very little to tackle the abuse that is rife on the platform. As far back as 2015, a widely reported study showed that 88% of abusive social media mentions occurred on Twitter. Whilst anonymous abuse, or ‘trolling’, is seemingly on the rise, only a tiny fraction of it happens on Facebook or other social networks, which makes this mostly a Twitter-specific problem. So when Twitter presented at the CES gadget show in Las Vegas on Wednesday, it was no surprise that abuse was front and centre in its presentation.

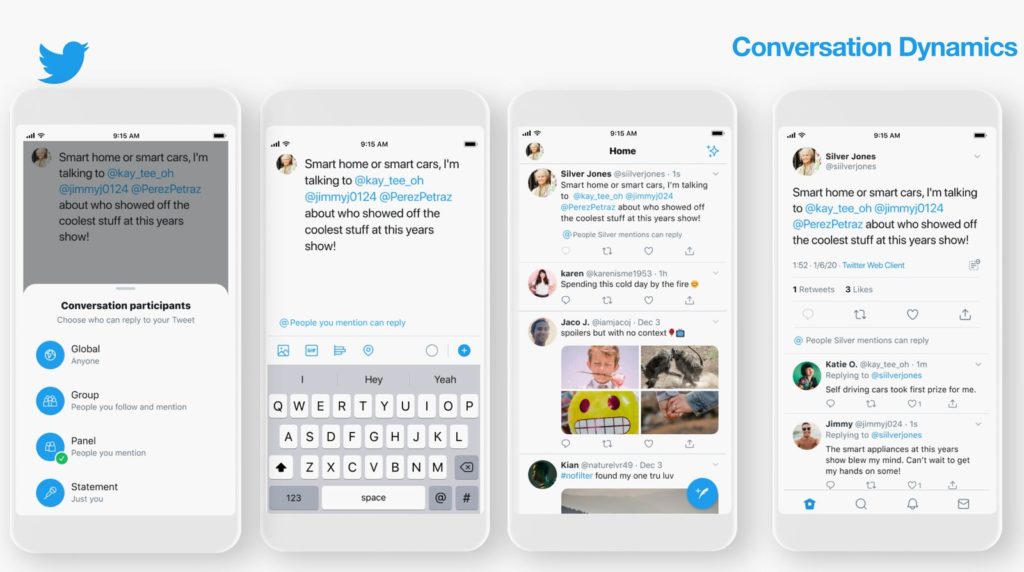

At the show, the social giant addressed the elephant in the room and announced that it would be experimenting with a fundamental change to the platform, designed to bridge the gap between a totally private account (where only the people you allow to follow you can see or interact with your content) and a completely public one, where anyone, anywhere, can see and interact with your tweets. The change is set to roll out slowly over the next few years and will give authors four ways to control who can reply to their tweets: Global, Group, Panel and Statement.

- Global: Anyone and everyone can respond to your tweet

- Group: Only your followers and anyone you mention can respond to your tweet

- Panel: The people mentioned in the tweet are the only ones who can respond

- Statement: No one can respond

Image credit: Twitter

Many of those commenting on the update have compared it to Facebook’s privacy settings, which have long allowed users to choose who sees their content on a post-by-post basis. But however similar other platforms may already be, this is a fundamental alteration of Twitter, and it is set to make huge waves in the way users experience it.

Perhaps the most concerning change to the platform is the ‘Statement’ function. There’s no doubt about it: we live in divisive times, in which debate has become a natural part of discourse. Debate can be healthy and constructive, and it’s important for society. The Statement function removes the discussion entirely and gives no one the opportunity to respond. This means that even those who set out to educate and inform, rather than attack, cannot add value by replying to a tweet.

This sounds harmless enough until you consider the spread of fake news and misinformation. The Statement function will allow people to say what they want, unchallenged, without facing correction or consequence. If Twitter follows its usual pattern of rolling features out to verified accounts first, this could give some incredibly influential people an unchallenged voice above a crowd who will no longer have the opportunity to see other world views as they currently do. It raises some important questions. Will Twitter regulate these tweets? Will it adopt a methodology like Facebook’s, where unverified news items are penalised by the algorithm? There’s no announced plan for this, or if there is, Twitter hasn’t shared it yet, and that’s a concern.

Twitter has framed the changes as a response to complaints of abuse, giving users control over who can and can’t interact with them. The ‘Group’ and ‘Panel’ functions allow people to choose users they know and trust to participate in a conversation, and they eliminate the risk of an unwanted user jumping in and disrupting the discussion. We expect this will be welcome news to those who suffer at the hands of internet trolls, and to those who find themselves continually drawn into debates on the platform.

However, by doing this, Twitter sends a clear message: it is putting the onus on its users to deal with the trolls and bullies that lurk on the platform. Instead of removing problem accounts, Twitter’s support team can now default to showing people how to limit their reach so that troll accounts cannot interact with them. This runs directly counter to the purpose of the platform, which is truly about people from a multitude of backgrounds coming together to discuss anything from politics to culture to memes. It’s true that not all of the conversation has been positive or even intelligent, but we cannot ignore that the platform set out to grow, and has succeeded in growing, a culture of community and connectivity that spans global boundaries.

We know that trolls can overshadow the positive nature of the platform by using the medium to attack, relentlessly and unapologetically, and we know that this has a real impact on people’s lives. It is for this very reason, then, that it shouldn’t be on users to handle this problem. Twitter should be doing more to remove the trolls and keep a closer eye on its users. We repeatedly see far-right activists tweeting racist, sexist and anti-LGBTQ content without facing any penalty. We see presidents inciting war crimes, and climate deniers and anti-vaccine activists spreading a plethora of fake news and doctored statistics in order to alter people’s worldview.

Twitter is out of its depth, drowning in the tides of this vitriol, and it can’t seem to get this right and remove these accounts. It needs to develop a structured approach to handling online abuse, and to start getting it right more often than it gets it wrong. Limiting people’s interactions will reduce the volume of abuse, and it will certainly help some users who are suffering at the hands of trolls, but it changes the functionality and purpose of the platform, and it continues to let trolls roam unchecked, albeit with fewer people to target.

Twitter’s slogan is ‘it’s what’s happening’, but perhaps that will soon need to be changed to ‘it’s what’s happening in your world’. The difference might seem subtle, but in reality, it changes everything.

Twitter doesn’t need to remove people’s ability to debate and discuss. It needs to remove those who intend harm and spread hatred on the platform. It’s that simple.